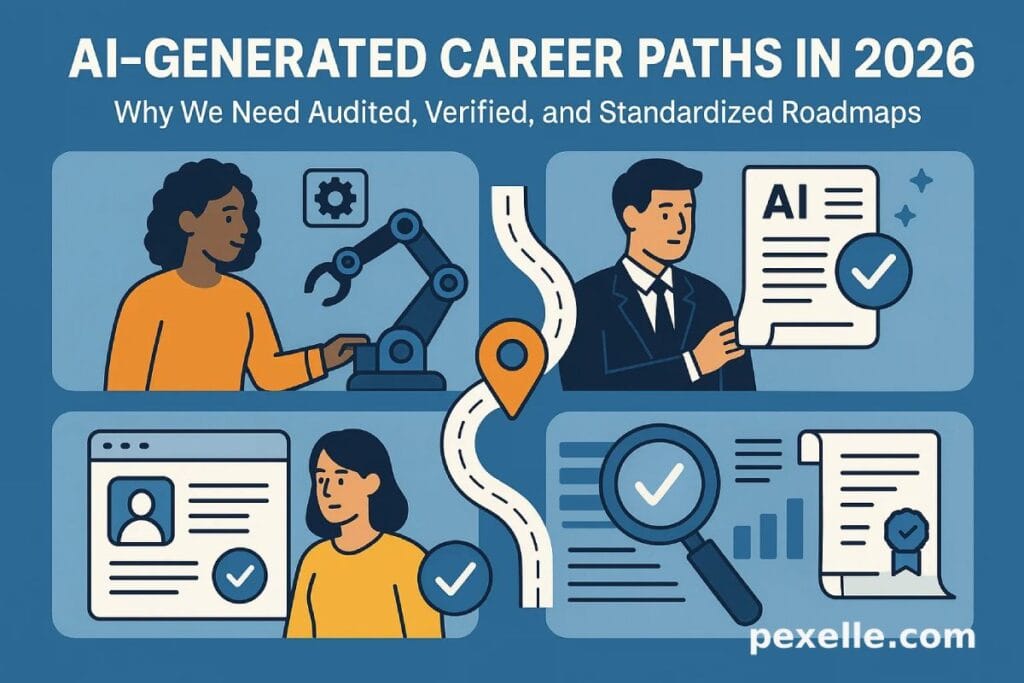

AI-Generated Career Paths in 2026: Why We Need Audited, Verified, and Standardized Roadmaps

By 2026, career development has taken a dramatic turn. Instead of human mentors, HR specialists, or learning designers crafting multi-year job trajectories, Large Language Models now generate them in seconds. These AI-generated career paths promise personalization, speed, and global scalability. But beneath the hype lies an uncomfortable truth: the majority of these paths are polluted with fabricated skills, unrealistic timelines, and non-existent requirements. And unless we build proper auditing and validation layers, the next generation of workers will be guided by systems that don’t understand the real world of work.

1. The Rise of AI-Designed Career Paths

The logic behind AI-generated career paths is straightforward:

massive labor-market datasets + predictive modeling + LLM reasoning = automated 3-year skill roadmap.

On paper, this is revolutionary. Instead of generic advice like “learn JavaScript,” an AI career agent can produce a precise sequence:

- Month 1–3: foundational programming

- Month 4–8: project-based full-stack development

- Year 2: cloud specialization

- Year 3: transition into DevOps or MLOps

But AI systems don’t just predict; they invent. They fill gaps in the data, hallucinate requirements, and make up “skills” or “milestones” that no employer has heard of. The resulting career path looks detailed and authoritative while being completely misaligned with real hiring standards.

2. The Hidden Problem: Skill Hallucinations and Unrealistic Transitions

Hallucination is not just a chat problem. It’s a structural flaw in AI-career modeling.

In 2026, LLMs often:

- Generate skills that don’t exist in ESCO, O*NET, or industry frameworks

- Misinterpret job titles (“AI Engineer” becomes “Quantum Modeler”)

- Suggest impossible transitions (“Junior UI Designer → Cloud Security Engineer in 6 months”)

- Overestimate job availability and salary ranges

- Combine skills from unrelated fields (e.g., DevOps + UX + Legal Compliance)

For individuals who trust AI as a neutral advisor, these errors are dangerous.

A single hallucinated skill can waste six months of learning.

A wrong transition can derail a career.

A fake requirement can discourage qualified candidates.

Without auditing, AI career paths become high-confidence misinformation.

3. Why AI Career Paths Must Be Audited

An AI-generated career roadmap is a chain of dependencies. If even one skill link is wrong, the entire chain collapses.

Auditing is not optional; it’s essential because:

• Industry standards change faster than model updates

AI models trained on 2023 data don’t reflect 2026 market shifts.

• Job descriptions are increasingly machine-generated

By 2026, over 60% of job descriptions (JDs) come from LLMs.

This means AI is training on AI’s mistakes: a feedback loop of error.

• Skill requirements are often misaligned across companies

AI might average contradictory data, producing impossible paths.

• Some AI systems push “popular” paths instead of accurate ones

Recommendation algorithms tend to over-promote trending fields.

• Employers need verifiable, machine-readable skill standards

Human-readable paths are not enough; they must be structurally valid.

The auditing layer acts as the immune system for AI career guidance.

4. What an Audited Career Path Should Look Like

A valid AI-generated roadmap must satisfy 5 conditions:

- Skills must exist in verified taxonomies (ESCO, O*NET, SFIA, vendor certifications).

- The sequence must follow real-world learning dependencies: no “learn Kubernetes before Linux” nonsense.

- The timeline must account for human cognitive skill acquisition: 3-week sprints are not realistic for advanced competencies.

- Job transitions must be statistically grounded, based on actual hiring data, not imagination.

- The entire chain must be machine-auditable, so future AIs can build on verified data, not fabricated paths.

Anything less is unacceptable.
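To make the first two conditions concrete, here is a minimal sketch of a roadmap check. The names `KNOWN_SKILLS`, `PREREQUISITES`, and `audit_roadmap` are hypothetical, and the data is a toy stand-in invented for this example; a real audit would look skills up in ESCO, O*NET, or SFIA rather than in a hard-coded set.

```python
# Illustrative only: a toy taxonomy and prerequisite map, not real ESCO/O*NET data.
KNOWN_SKILLS = {"linux", "docker", "kubernetes", "python"}
PREREQUISITES = {"docker": {"linux"}, "kubernetes": {"linux", "docker"}}


def audit_roadmap(roadmap):
    """Return a list of human-readable problems found in an ordered skill list."""
    problems = []
    learned = set()
    for skill in roadmap:
        # Condition 1: the skill must exist in the (assumed) verified taxonomy.
        if skill not in KNOWN_SKILLS:
            problems.append(f"unknown skill: {skill}")
            continue
        # Condition 2: every prerequisite must appear earlier in the roadmap.
        missing = PREREQUISITES.get(skill, set()) - learned
        for prereq in sorted(missing):
            problems.append(f"{skill} scheduled before prerequisite {prereq}")
        learned.add(skill)
    return problems


good = audit_roadmap(["linux", "docker", "kubernetes"])
bad = audit_roadmap(["kubernetes", "linux", "quantum modeling"])
print(good)
print(bad)
```

The function returns a list of problems instead of a single pass/fail flag, so a reviewer can see exactly which link in the chain is broken.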

5. The Role of Pexelle: Building the Skills Validation Layer for AI

This is where platforms like Pexelle become necessary infrastructure.

AI shouldn’t operate blind. It needs a standardized, validated, cryptographically verifiable skills registry to anchor its outputs.

Pexelle can provide three critical components:

1) Verified Skills Graph

A knowledge graph built from ESCO, O*NET, certifications, and real job data, so that any skill or dependency an AI proposes can be checked against a verified source.
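One way to picture such a graph is as a mapping from each skill to the skills it depends on. The sketch below is an assumption about how this could be modeled, not a description of Pexelle’s actual design; `SKILL_GRAPH` and `learning_order` are hypothetical names, and the entries are invented. It uses Python’s standard `graphlib` module (Python 3.9+) to derive a valid learning order and to reject circular dependencies.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical graph: each skill maps to the set of skills it depends on.
SKILL_GRAPH = {
    "linux": set(),
    "networking": set(),
    "docker": {"linux"},
    "kubernetes": {"docker", "networking"},
}


def learning_order(graph):
    """Return one valid learning order, or raise ValueError on circular dependencies."""
    try:
        return list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        # The second element of args holds the detected cycle.
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc


order = learning_order(SKILL_GRAPH)
print(order)
```

Any order the sorter returns places prerequisites first, so “Kubernetes before Linux” cannot come out of it.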

2) AI Auditing Engine

A system that scans AI-generated paths and checks:

- skill validity

- dependency chain integrity

- transition feasibility

- market relevance

- time realism

This turns Pexelle into the “AI Career Path Quality Gate.”
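Two of these checks, transition feasibility and time realism, can be sketched as simple threshold tests. Everything here is an assumption made for illustration: the counts in `OBSERVED_TRANSITIONS` are invented, the thresholds are arbitrary, and `Step` and `audit_step` are hypothetical names rather than part of any real engine.

```python
from dataclasses import dataclass

# Invented numbers for illustration; a real engine would use observed hiring data.
OBSERVED_TRANSITIONS = {
    ("junior ui designer", "ux designer"): 420,
    ("junior ui designer", "cloud security engineer"): 1,
}
MIN_OBSERVATIONS = 25     # assumed cutoff for a "statistically grounded" transition
MIN_MONTHS_PER_STEP = 12  # assumed minimum duration for a role change


@dataclass
class Step:
    from_role: str
    to_role: str
    months: int


def audit_step(step):
    """Return the names of the checks this roadmap step fails."""
    failures = []
    seen = OBSERVED_TRANSITIONS.get((step.from_role, step.to_role), 0)
    if seen < MIN_OBSERVATIONS:
        failures.append("transition feasibility")
    if step.months < MIN_MONTHS_PER_STEP:
        failures.append("time realism")
    return failures


plausible = audit_step(Step("junior ui designer", "ux designer", 18))
implausible = audit_step(Step("junior ui designer", "cloud security engineer", 6))
print(plausible)
print(implausible)
```

Under these assumed thresholds, the “Junior UI Designer → Cloud Security Engineer in 6 months” transition fails both checks.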

3) Proof-of-Skill (PoSK) Layer

Every recommended skill can be linked to:

- verified courses

- validated evidence

- performance tasks

- peer-reviewed artifacts

- blockchain-backed certificates

This closes the loop between learning and hiring.
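As a rough sketch of what “verifiable” evidence could mean in practice, the example below signs a credential record and later detects tampering. It deliberately swaps in a simpler technique, a shared-secret HMAC from Python’s standard library, in place of blockchain-backed certificates or public-key signatures. `ISSUER_KEY`, `sign_credential`, `verify_credential`, and the record fields are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical issuer key; a real system would use managed keys or public-key signatures.
ISSUER_KEY = b"demo-issuer-key"


def sign_credential(credential):
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the credential."""
    payload = json.dumps(credential, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()


def verify_credential(credential, tag):
    """Compare tags in constant time; any change to the record invalidates the tag."""
    return hmac.compare_digest(sign_credential(credential), tag)


credential = {"holder": "alice", "skill": "kubernetes", "evidence": "performance task 12"}
tag = sign_credential(credential)
tampered = {**credential, "skill": "cloud security"}

print(verify_credential(credential, tag))
print(verify_credential(tampered, tag))
```

Serializing with sorted keys means the same record always produces the same tag, regardless of the order in which its fields were written.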

Pexelle’s role is not to replace AI career systems; it’s to correct them, standardize them, and protect individuals from misinformation.

6. The Future: Standardized, Machine-Audited AI Career Paths

Between 2026 and 2030, every major AI career platform will need:

- standardized skills

- machine-audited sequences

- verified learning pathways

- transparent competency frameworks

- cryptographic proof of achievement

The market will shift from “AI-generated paths” to AI-governed, human-safe, standardized paths.

And the platforms that provide the validation backbone (the “Skill Safety Layer”) will define the future of JobTech.

This is where Pexelle can lead globally.

Source: Medium.com